AI Is Fueling a New Wave of Fraud

In the time it takes to read this paragraph, another generative AI fraud attempt has most likely been made. Every compliance officer today faces a startling reality: fraud is no longer just digital; it is intelligently automated. In 2026, fraud schemes powered by generative artificial intelligence are not fringe threats; they are mainstream risk vectors that are reshaping how criminals deceive institutions and customers.

Criminals now use AI to generate authentic-sounding phishing emails, create convincing fake identities, and even produce deepfake voices or videos that impersonate trusted individuals or executives. These tools make scams faster, more scalable, and alarmingly believable while requiring less technical skill from fraudsters than ever before.

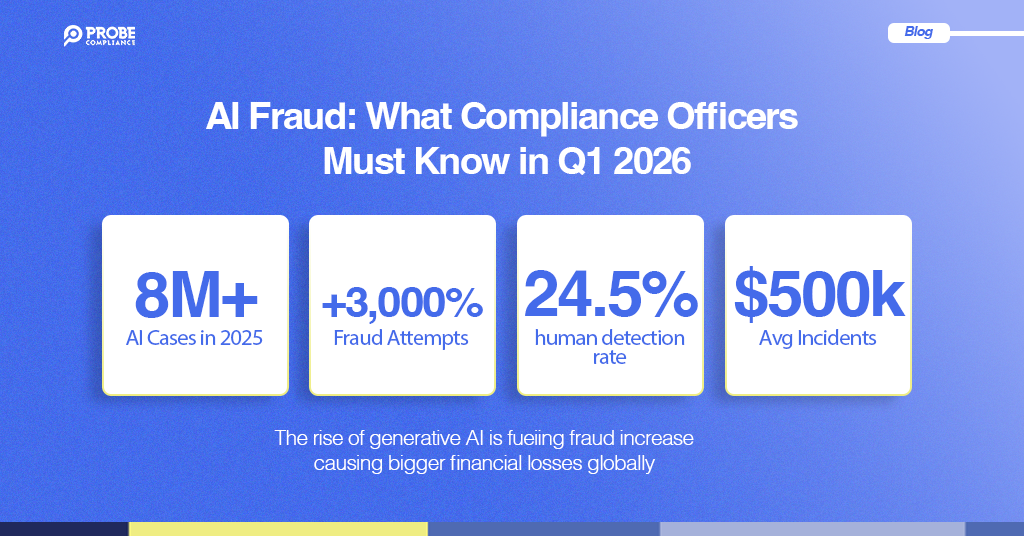

Recent data on AI-generated deepfakes shows explosive growth in fraudulent content. Reports indicate a rise from around 500,000 deepfake files in 2023 to over 8 million by 2025, with fraud attempts surging by as much as 3,000%.

This is not limited to obscure schemes. AI fraud now affects everything from financial services to large-scale social engineering attacks.

The Scale and Sophistication of Generative AI-Driven Fraud

The sophistication of AI fraud means traditional compliance safeguards are becoming obsolete. Here are several examples that show how these threats unfold:

- Synthetic identities: AI can combine stolen and fabricated data to create fake personas that pass basic verification checks, making account opening and loan fraud harder to detect.

- Deepfake audio scams: Fraudsters clone voices to impersonate family members or executives, tricking victims into sending funds. Cases like these have been documented in multiple regions worldwide.

- Automated social engineering: AI tools generate thousands of personalized phishing messages within minutes, far surpassing human capabilities.

- Regulatory blind spots: Many existing compliance rules were written before the rise of generative AI, leaving institutions struggling to interpret legacy frameworks for these new attack vectors.

At the same time, AI is already a vital part of the fight against fraud. In Africa, major banks and financial institutions are beginning to adopt AI-powered solutions such as Probe Compliance for fraud detection and customer verification in markets including South Africa, Kenya, and Nigeria.

This dual role, where AI enables fraud while also detecting it, places compliance teams at the center of a fast-moving technological arms race.

What Compliance Officers Must Know and Do in 2026

To stay ahead, compliance leaders must recognize that AI is not simply a tool; it is a battlefield. Here is what that means in practice:

1. Move Beyond Static Rules to Real-Time Behavioral Intelligence

Traditional rule-based systems only flag what they expect. Modern AI can model normal behavior and detect subtle anomalies in real time, revealing fraud attempts humans might overlook.

Compliance teams should:

- Adopt continuous monitoring systems or solutions powered by machine learning.

- Integrate behavioral analytics across all transactional channels.
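To make "modeling normal behavior" concrete, here is a minimal sketch in Python. It is an illustration only, not a description of any vendor's product: the class name `BehaviorMonitor`, its parameters, and the choice of a rolling z-score on transaction amounts are all assumptions made for this example. Production systems typically combine many more signals and use trained models.

```python
from collections import defaultdict, deque
from statistics import mean, stdev


class BehaviorMonitor:
    """Hypothetical per-customer baseline of recent transaction amounts."""

    def __init__(self, window=50, min_history=5, threshold=3.0):
        self.min_history = min_history  # observations needed before scoring
        self.threshold = threshold      # |z| above this is flagged
        # Each customer keeps only their most recent `window` amounts.
        self.history = defaultdict(lambda: deque(maxlen=window))

    def score(self, customer_id, amount):
        """Return the z-score of `amount` against the customer's baseline,
        or None when there is not enough history to judge."""
        past = list(self.history[customer_id])
        if len(past) < self.min_history:
            return None
        sigma = stdev(past)
        if sigma == 0:
            # Every past amount is identical: any deviation is extreme.
            return 0.0 if amount == past[0] else float("inf")
        return (amount - mean(past)) / sigma

    def observe(self, customer_id, amount):
        """Score a transaction; add it to the baseline only if not flagged,
        so suspicious activity does not distort what counts as normal."""
        z = self.score(customer_id, amount)
        flagged = z is not None and abs(z) > self.threshold
        if not flagged:
            self.history[customer_id].append(amount)
        return z, flagged


monitor = BehaviorMonitor()
for amt in [100, 105, 98, 102, 101, 99]:
    monitor.observe("customer-1", amt)

z, flagged = monitor.observe("customer-1", 5000)
print(flagged)  # the 5000 transfer is far outside this customer's baseline
```

Unlike a static rule such as "flag anything above a fixed amount", the threshold here adapts to each customer: the same transfer could be routine for one account and anomalous for another.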

2. Treat Data Governance as a Core Fraud Control Strategy

AI thrives on data, but poor data quality or weak access controls create opportunities for fraud to infiltrate compliance systems. High-quality, well-governed data improves both fraud detection and regulatory compliance outcomes.

3. Deepen Collaboration Between Fraud, IT, and Compliance Units

AI-driven fraud does not respect organizational boundaries. Compliance officers must collaborate closely with cybersecurity, IT, and data science teams to build robust, cross-functional defense systems.

4. Educate and Equip Staff for AI-Era Threats

From governance teams to investigators, staff must receive training on:

- AI threat vectors like deepfakes, synthetic identities, and voice scams

- Standardized playbooks for escalation and incident response

5. Leverage AI Responsibly with Explainability and Oversight

Using AI for fraud detection is essential, but compliance must ensure these tools are explainable, auditable, and aligned with ethical and regulatory standards. This transparency strengthens trust with regulators and minimizes exposure to legal and reputational risks.
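As a rough illustration of what "explainable and auditable" can mean in practice, the sketch below records why an alert fired alongside the decision itself. Everything in it is hypothetical: the `AlertDecision` record, the `decide` function, the feature names, and the simple weighted-sum score are assumptions chosen for readability, not a reference to any specific regulatory requirement or product.

```python
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone


@dataclass
class AlertDecision:
    """Hypothetical audit record for one scoring decision."""
    transaction_id: str
    model_version: str
    score: float
    threshold: float
    flagged: bool
    reasons: list
    decided_at: str


def decide(transaction_id, features, weights, threshold, model_version="demo-0.1"):
    """Score a transaction with a transparent weighted sum and keep the
    three largest contributions as human-readable reasons."""
    contributions = {
        name: weights.get(name, 0.0) * value for name, value in features.items()
    }
    score = sum(contributions.values())
    top = sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)[:3]
    reasons = [f"{name} contributed {value:+.2f}" for name, value in top if value != 0]
    return AlertDecision(
        transaction_id=transaction_id,
        model_version=model_version,
        score=score,
        threshold=threshold,
        flagged=score > threshold,
        reasons=reasons,
        decided_at=datetime.now(timezone.utc).isoformat(),
    )


def to_audit_line(decision):
    """Serialize the decision as one JSON line for an append-only audit log."""
    return json.dumps(asdict(decision), sort_keys=True)


decision = decide(
    "txn-001",
    features={"new_device": 1, "amount_vs_baseline": 4.0, "account_age_days": 10},
    weights={"new_device": 0.5, "amount_vs_baseline": 0.3, "account_age_days": -0.01},
    threshold=1.0,
)
print(to_audit_line(decision))
```

Storing the model version, threshold, and top reasons with every decision gives investigators and regulators something concrete to review when an alert is questioned.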

AI fraud is no longer a distant threat; it is today’s reality. Compliance leaders who understand, anticipate, and proactively counter these risks will not just survive; they will define what responsible, future-ready compliance looks like.

Partner with Probe Compliance to stay ahead of emerging fraud trends, enhance detection systems, and strengthen your AML/CFT strategy through AI-powered intelligence built for Africa’s regulatory realities.

Explore Our Proven Solutions → Probecompliance.com